Invitation to attend the discussion of the Master's thesis submitted by researcher Margo Sabry Shenouda

Congratulations from Professor Dr. Tayseer Hassan Abdel Hamid to researcher Mahmoud Qutb Abdo Diab on winning the Best Research Paper award.

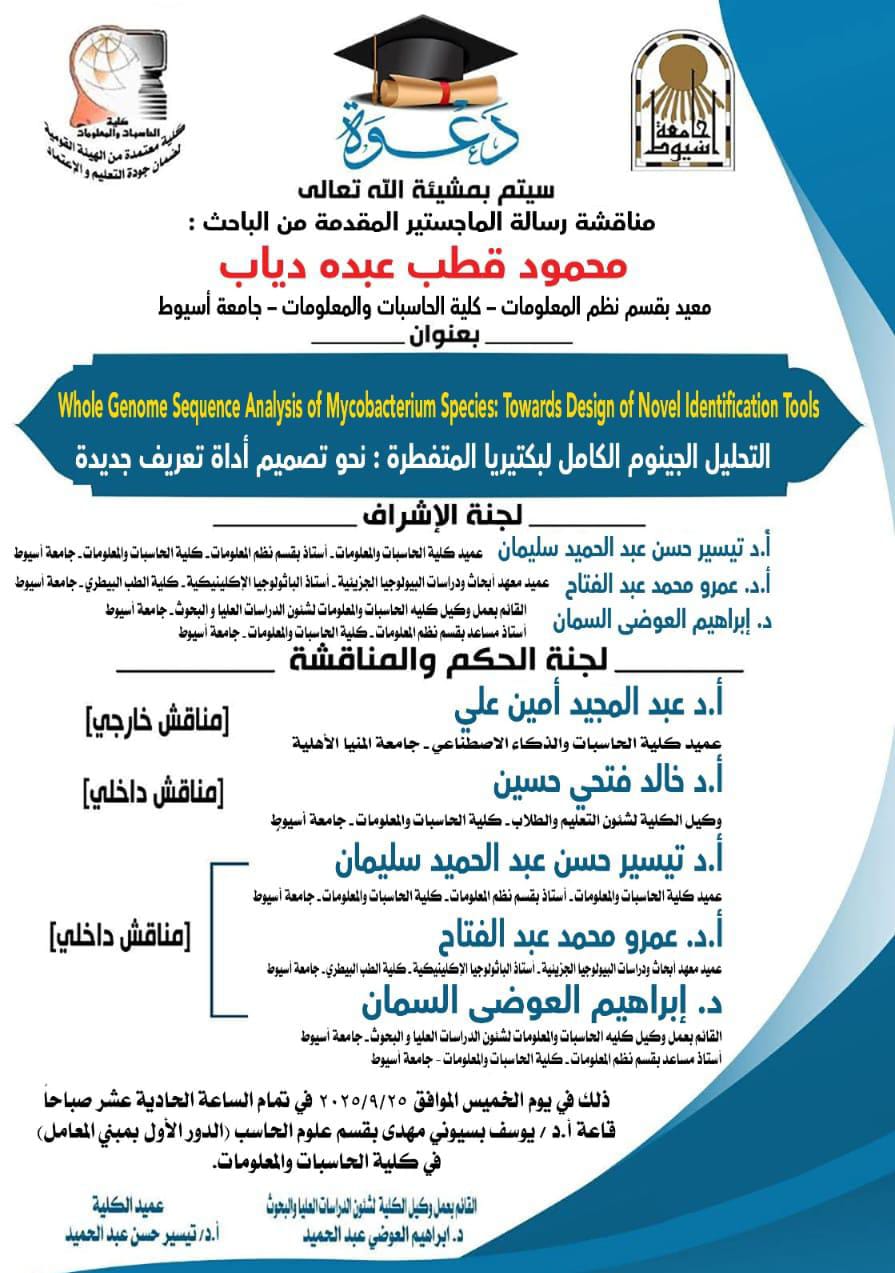

Invitation to attend the discussion of the Master's thesis submitted by researcher Mahmoud Qutb Abdo Diab

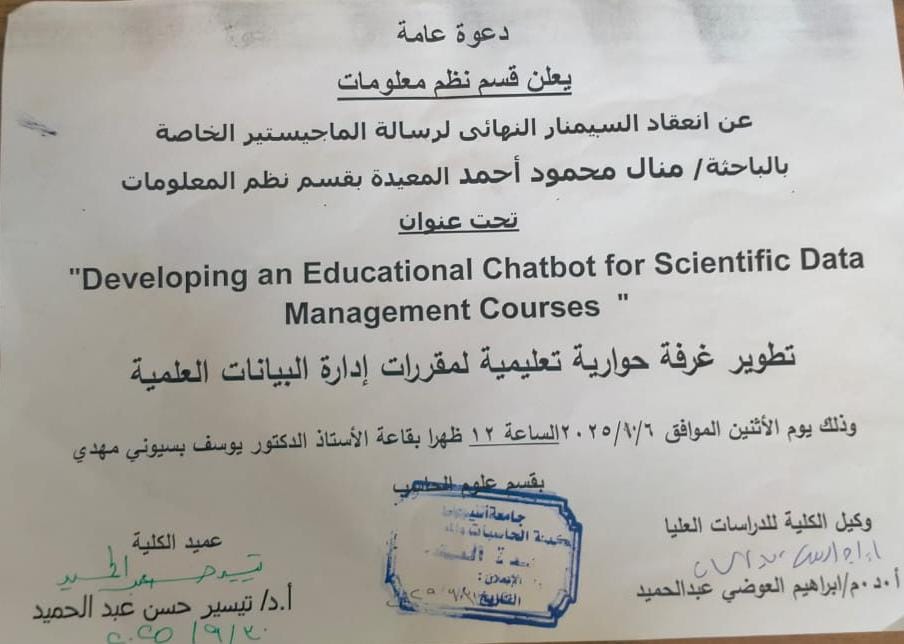

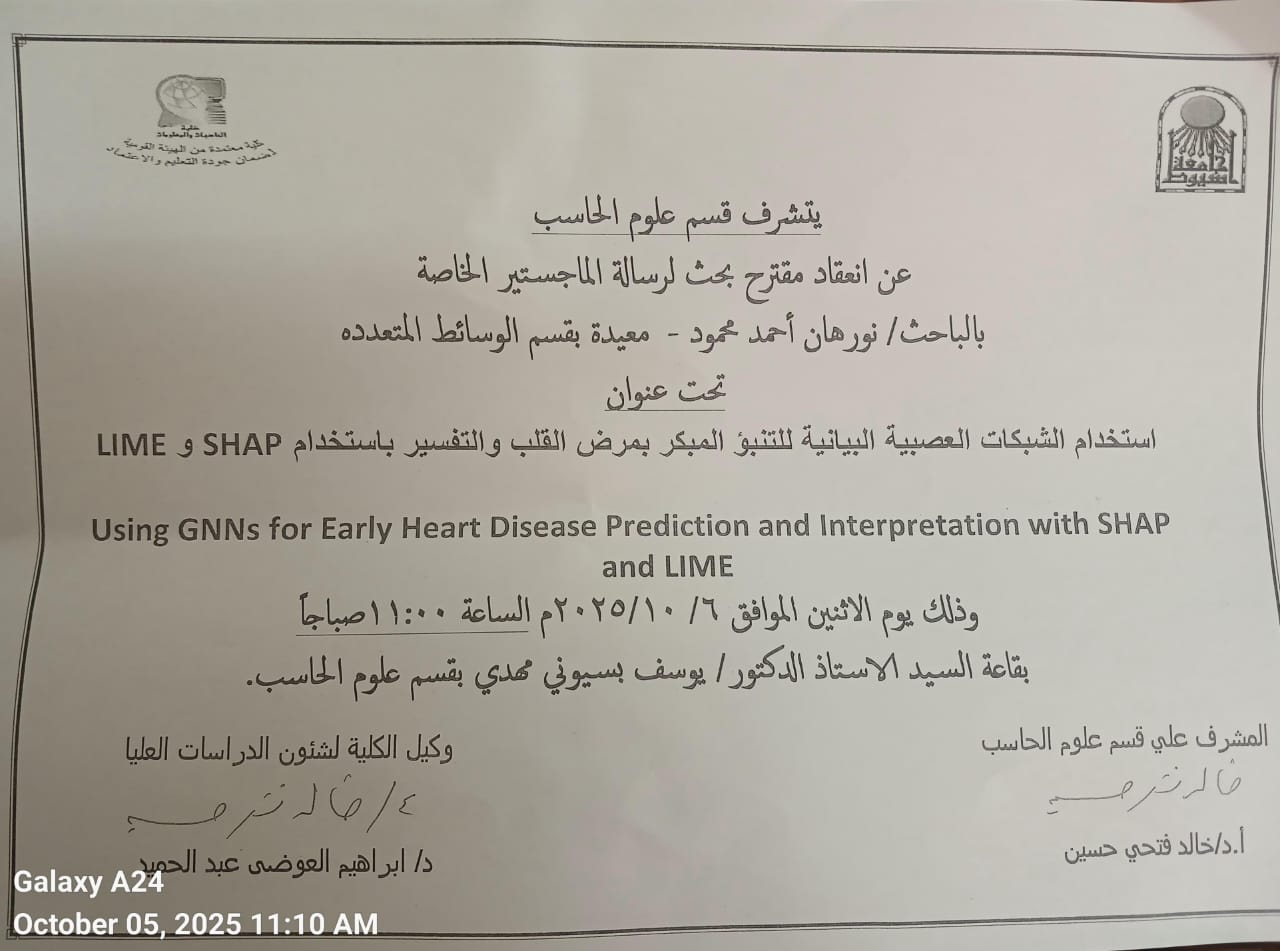

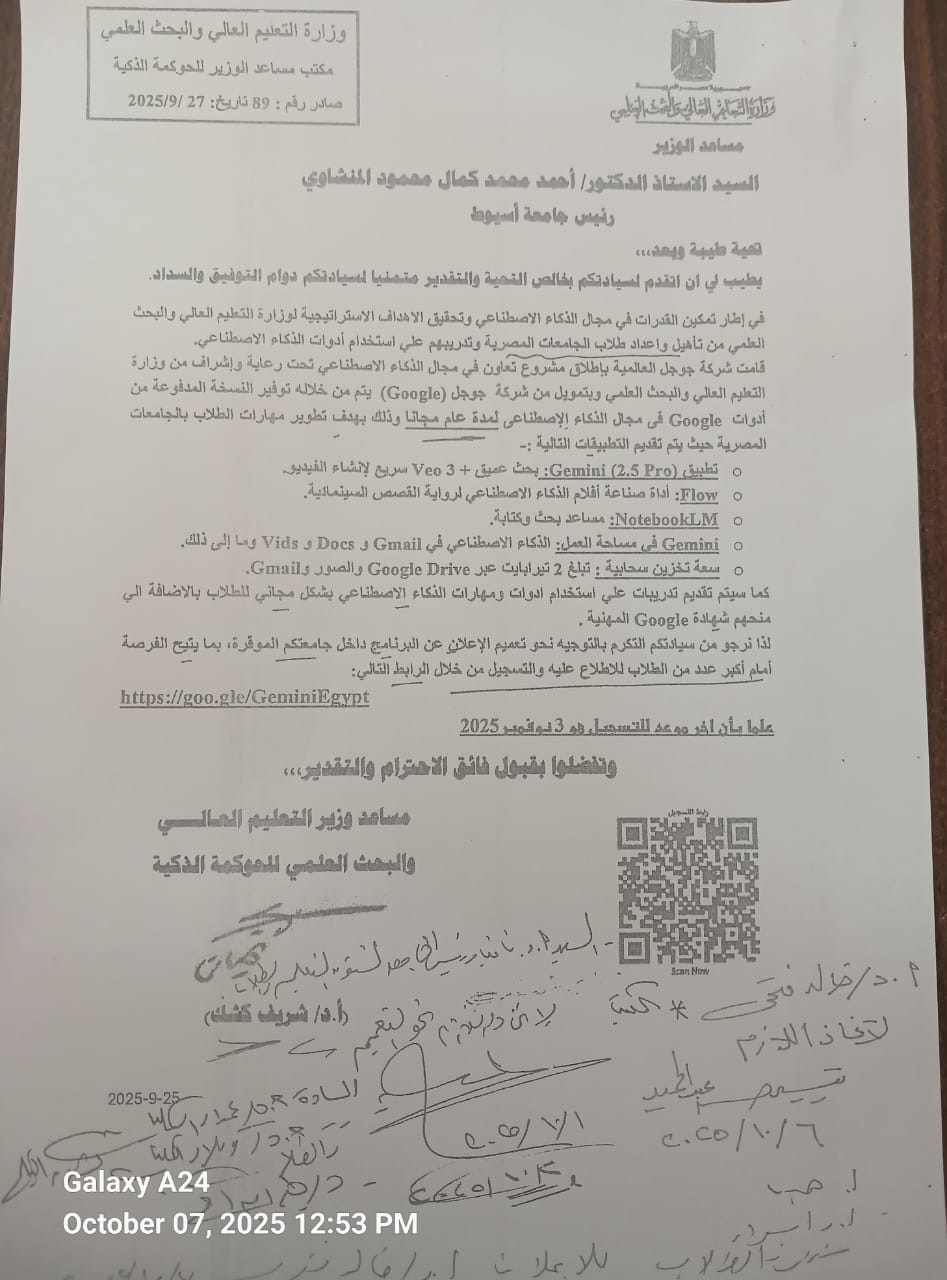

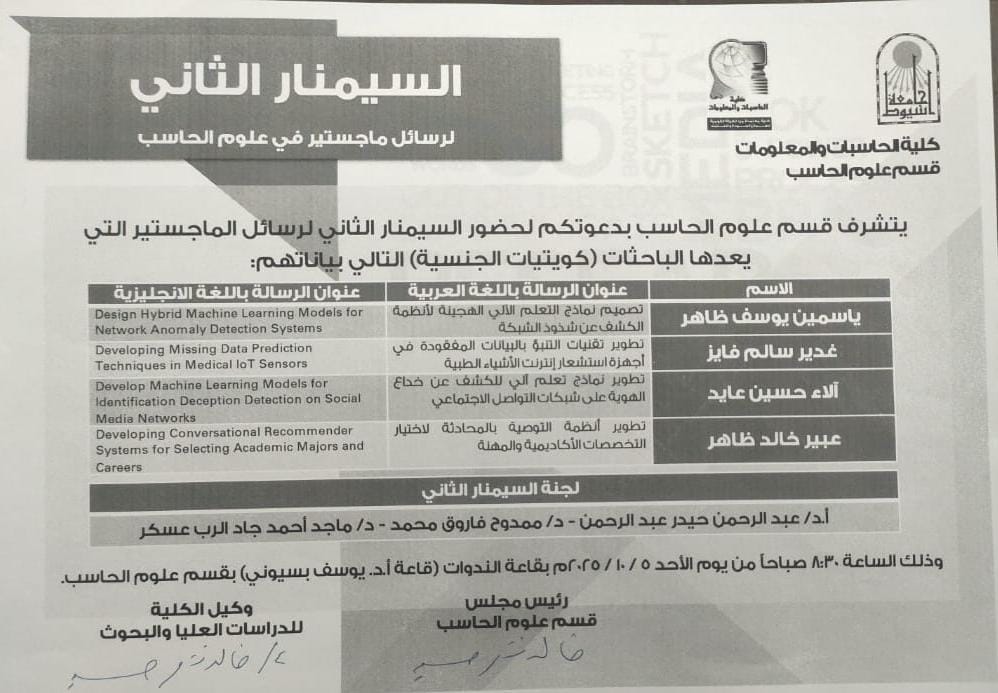

Invitation to attend the second seminar for Master's theses in the Computer Science Department

News category: Postgraduates