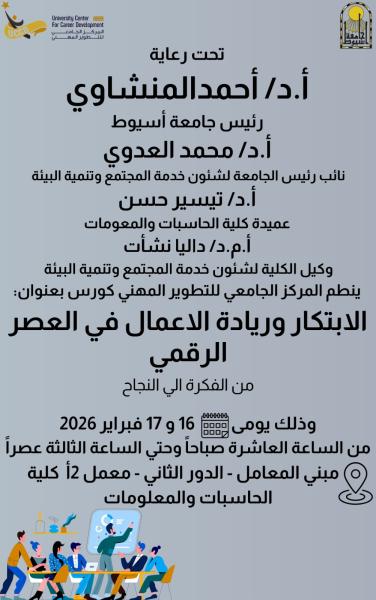

Invitation to attend the Innovation and Entrepreneurship in the Digital Age course

An invitation to discuss the master’s thesis of researcher Mohamed Hassan Omar Mohamed in the Information Technology Department

An invitation to attend the validity seminar for the master’s thesis of researcher Samar Abdel Azim Abdel Hadi, Department of Computer Science

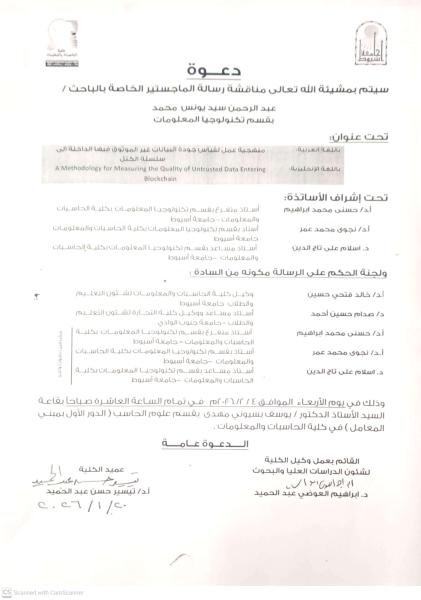

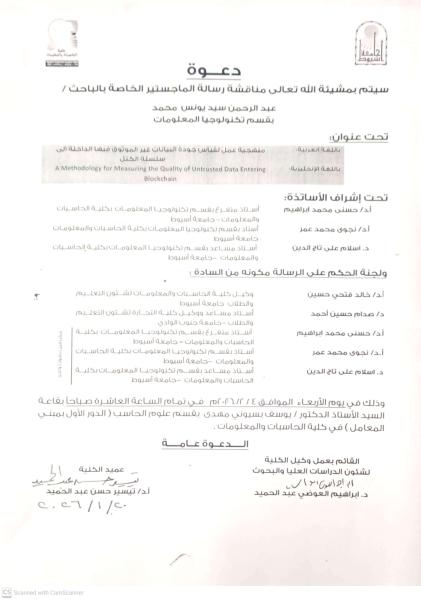

An invitation to discuss the master’s thesis of researcher Abdul Rahman Sayed Younis Muhammad in the Information Technology Department

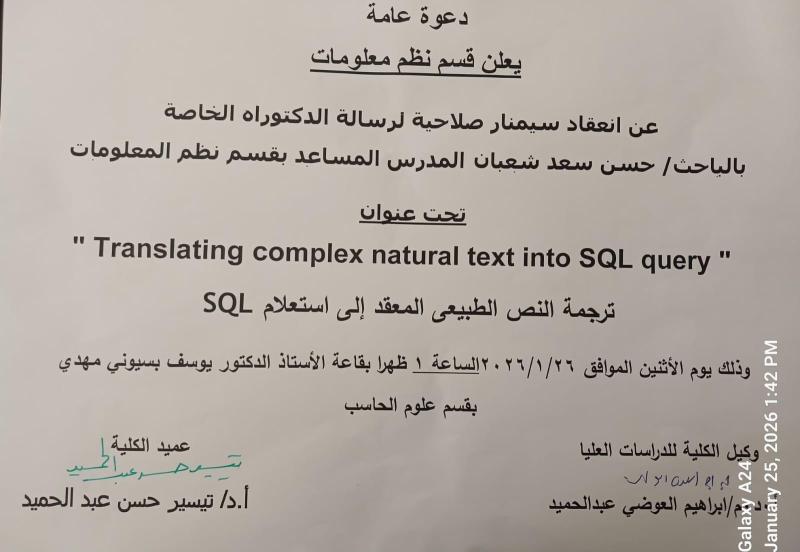

Announcement of the PhD dissertation validity seminar for researcher Hassan Saad Shaaban, Assistant Lecturer in the Information Systems Department

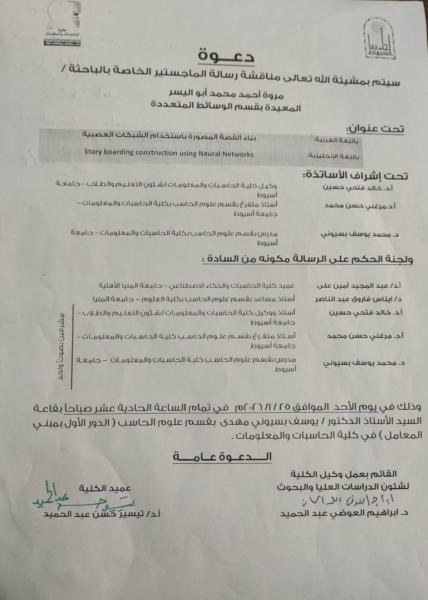

Announcement of the discussion of the master's thesis of researcher Marwa Ahmed Mohamed Abu Al-Yusr, Teaching Assistant in the Multimedia Department

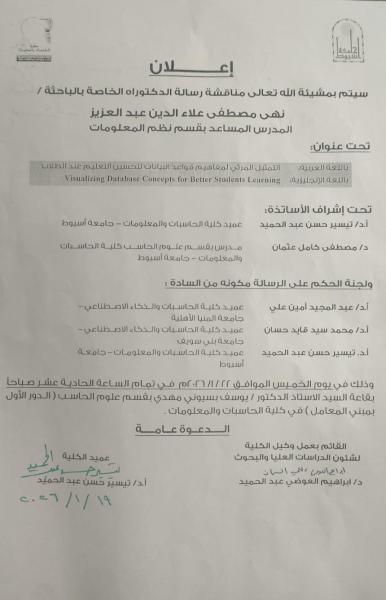

Announcement of the discussion of the doctoral dissertation of researcher Noha Mustafa Alaa El-Din Abdel Aziz, Assistant Lecturer in the Information Systems Department

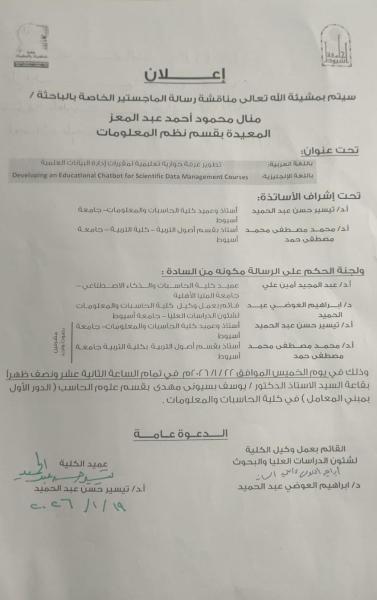

Announcement of the discussion of the master's thesis of researcher Manal Mahmoud Ahmed Abdel-Moaz, Teaching Assistant in the Information Systems Department

G-SQL: A Schema-Aware and Rule-Guided Approach for Robust Natural Language to SQL Translation

Research Authors

Hassan S Shalaan, Taysir Hassan A Soliman, Amr M Abdelaziz

Research Journal

IEEE Access

Research Year

2025